SVM - hard or soft margins?

Question

Given a linearly separable dataset, is it necessarily better to use a hard-margin SVM over a soft-margin SVM?

Recommended answer

I would expect a soft-margin SVM to be better even when the training dataset is linearly separable. The reason is that in a hard-margin SVM, a single outlier can determine the boundary, which makes the classifier overly sensitive to noise in the data.

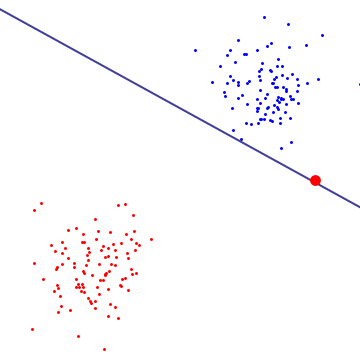

In the diagram above, a single red outlier essentially determines the boundary, which is the hallmark of overfitting.
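The outlier effect can be reproduced on a toy problem. The sketch below is not taken from the answer: it assumes one-dimensional data in which every negative point lies to the left of every positive point, in which case the hard-margin solution is simply the midpoint of the gap between the classes. The function name and the data values are made up for illustration.

```python
def hard_margin_threshold_1d(negatives, positives):
    """Max-margin threshold for 1-D data, assuming negatives lie left of positives.

    Returns (threshold, margin), where margin is the distance from the
    threshold to the closest point of either class.
    """
    left, right = max(negatives), min(positives)
    if left >= right:
        raise ValueError("classes are not separable with negatives on the left")
    return (left + right) / 2.0, (right - left) / 2.0


# Hypothetical toy data: two well-separated clusters.
negatives = [-3.0, -2.5, -2.0]
positives = [2.0, 2.5, 3.0]

clean_threshold, clean_margin = hard_margin_threshold_1d(negatives, positives)

# Add a single positive outlier close to the negative cluster.
noisy_threshold, noisy_margin = hard_margin_threshold_1d(negatives, positives + [-1.5])

print(clean_threshold, clean_margin)  # 0.0 2.0
print(noisy_threshold, noisy_margin)  # -1.75 0.25
```

One added point moves the boundary from 0 to -1.75 and shrinks the margin from 2 to 0.25, even though the data are still perfectly separable.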

To get a sense of what a soft-margin SVM is doing, it's better to look at it in the dual formulation. There you can see that it has the same margin-maximizing objective (the margin could be negative) as the hard-margin SVM, but with the additional constraint that each Lagrange multiplier associated with a support vector is bounded by C. Essentially, this bounds the influence of any single point on the decision boundary. For the derivation, see Proposition 6.12 in Cristianini/Shawe-Taylor's "An Introduction to Support Vector Machines and Other Kernel-based Learning Methods".
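For reference, a common textbook way to write the soft-margin dual is sketched below. The notation is an assumption, not quoted from the book: $\alpha_i$ are the Lagrange multipliers, $y_i \in \{-1, +1\}$ the labels, and $K$ the kernel.

$$
\max_{\alpha} \; \sum_i \alpha_i - \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j y_i y_j K(x_i, x_j)
\quad \text{subject to} \quad \sum_i \alpha_i y_i = 0, \qquad 0 \le \alpha_i \le C
$$

In this form the hard-margin dual has the same objective with only $\alpha_i \ge 0$; the upper bound $\alpha_i \le C$ is the extra constraint that caps the influence of any single point.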

The result is that a soft-margin SVM can choose a decision boundary that has non-zero training error even if the dataset is linearly separable, and is less likely to overfit.

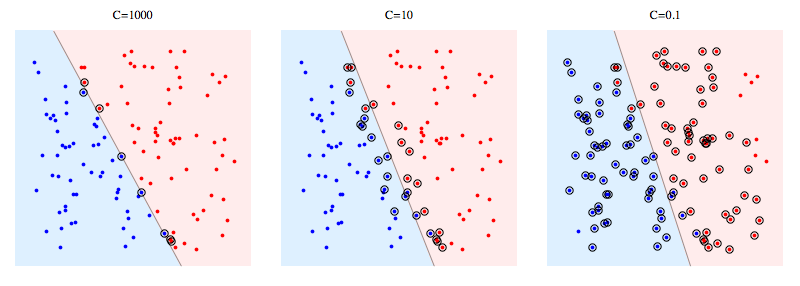

Here's an example using libSVM on a synthetic problem (image above). Circled points show support vectors. You can see that decreasing C causes the classifier to sacrifice linear separability in order to gain stability, in the sense that the influence of any single datapoint is now bounded by C.
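The same trade-off can be seen without libSVM on a tiny one-dimensional problem. The sketch below is only an illustration under stated assumptions: it minimizes the standard primal objective 0.5*w^2 + C * (sum of hinge losses) directly, using a ternary search over w (the objective is convex) and picking the best bias among the hinge kinks for each w. The data, function names, and the search bound `w_bound` are all made up for this example.

```python
def objective(w, b, xs, ys, C):
    """Soft-margin primal objective for a 1-D linear classifier."""
    hinge = sum(max(0.0, 1.0 - y * (w * x + b)) for x, y in zip(xs, ys))
    return 0.5 * w * w + C * hinge


def best_bias(w, xs, ys, C):
    """For fixed w the objective is convex and piecewise linear in b,
    so a minimizer sits at one of the kinks b = y - w * x."""
    candidates = [y - w * x for x, y in zip(xs, ys)]
    return min(candidates, key=lambda b: objective(w, b, xs, ys, C))


def fit_soft_margin_1d(xs, ys, C, w_bound=1000.0, iterations=200):
    """Ternary search over w; assumes the optimal w lies in [-w_bound, w_bound]."""
    lo, hi = -w_bound, w_bound
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        f1 = objective(m1, best_bias(m1, xs, ys, C), xs, ys, C)
        f2 = objective(m2, best_bias(m2, xs, ys, C), xs, ys, C)
        if f1 < f2:
            hi = m2
        else:
            lo = m1
    w = (lo + hi) / 2.0
    return w, best_bias(w, xs, ys, C)


def training_errors(w, b, xs, ys):
    """Number of training points on the wrong side of the boundary."""
    return sum(1 for x, y in zip(xs, ys) if y * (w * x + b) <= 0)


# Hypothetical data: two clusters plus one positive outlier at x = -1.5.
xs = [-3.0, -2.5, -2.0, 2.0, 2.5, 3.0, -1.5]
ys = [-1, -1, -1, 1, 1, 1, 1]

w_hard, b_hard = fit_soft_margin_1d(xs, ys, C=1000.0)  # close to hard margin
w_soft, b_soft = fit_soft_margin_1d(xs, ys, C=0.1)     # strongly regularized

# Large C: boundary squeezed next to the outlier, no training errors.
print(round(-b_hard / w_hard, 2), training_errors(w_hard, b_hard, xs, ys))
# Small C: boundary stays between the clusters, the outlier is misclassified.
print(round(-b_soft / w_soft, 2), training_errors(w_soft, b_soft, xs, ys))
```

With C=1000 the boundary lands at about -1.75, right beside the outlier; with C=0.1 it moves to about -0.25 and the classifier accepts one training error instead.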

Meaning of support vectors:

For a hard-margin SVM, support vectors are the points which are "on the margin". In the picture above, C=1000 is pretty close to a hard-margin SVM, and you can see the circled points are the ones that touch the margin (the margin is almost 0 in that picture, so it's essentially the same as the separating hyperplane).

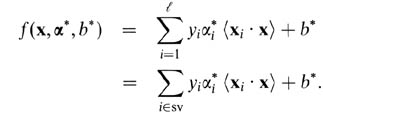

For a soft-margin SVM, it's easier to explain them in terms of dual variables. Your support vector predictor, written in terms of dual variables, is the function shown in the image above.

Here, the alphas and b are parameters found during the training procedure, the xi's and yi's are your training set, and x is the new datapoint. Support vectors are the datapoints from the training set that are included in the predictor, i.e., the ones with a non-zero alpha parameter.
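In standard notation, a predictor of this kind is usually written as follows (the kernel $K$ is an assumption here; for a linear SVM it is just the dot product):

$$
f(x) = \operatorname{sign}\Big(\sum_i \alpha_i y_i K(x_i, x) + b\Big)
$$

A minimal sketch of evaluating it is shown below. The training set is a made-up one-dimensional example, and the alphas and b were worked out by hand for the hard-margin solution on that data; they are illustrative values, not output from libSVM.

```python
def linear_kernel(u, v):
    """Dot product for 1-D inputs."""
    return u * v


def predict(x, train_x, train_y, alphas, b, kernel=linear_kernel):
    """Evaluate sign(sum_i alpha_i * y_i * K(x_i, x) + b).

    Points with alpha == 0 contribute nothing, so only support vectors matter.
    """
    score = sum(a * y * kernel(xi, x)
                for a, xi, y in zip(alphas, train_x, train_y) if a != 0.0)
    return 1 if score + b >= 0 else -1


# Hypothetical training set and hand-derived dual variables.
train_x = [-3.0, -2.5, -2.0, 2.0, 2.5, 3.0, -1.5]
train_y = [-1, -1, -1, 1, 1, 1, 1]
alphas = [0.0, 0.0, 8.0, 0.0, 0.0, 0.0, 8.0]
b = 7.0

# Support vectors are exactly the training points with non-zero alpha.
support_vectors = [xi for xi, a in zip(train_x, alphas) if a > 0]
print(support_vectors)                               # [-2.0, -1.5]
print(predict(0.0, train_x, train_y, alphas, b))     # 1
print(predict(-3.0, train_x, train_y, alphas, b))    # -1
```

Only two of the seven training points carry a non-zero alpha, and the predictions depend on those two points alone.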
